Sequencing Intro |

August 29th, 2022 |

| bio |

The biological world primarily stores information with nucleic acids. These are series of nucleotides, often called bases: A, C, G, and T. For example, a strand of nucleotides could look like:

GGCTGAGACAGTGCCCAGATCGTTACACCATTCGT

The two main kinds of nucleic acid are DNA and RNA. They differ in a few ways, but from a computational perspective they're very similar. Physical RNA will have U instead of T, though in sequencing data you'll often see it with with T anyway.

Each base has a complement: A bonds with T, and G with C. A nucleic acid that comprises two bonded strands is called double stranded. Each base in one strand will be bonded to its complement:

GGCTGAGACAGTGCCCAGATCGTTACACCATTCGT ||||||||||||||||||||||||||||||||||| CCGACTCTGTCACGGGTCTAGCAATGTGGTAAGCA

This is the famous double helix and makes for a more stable structure than single stranded nucleic acid.

Going from a physical nucleic acid to a sequence on a computer is sequencing, and the reverse is synthesis. I'm only going to talk about the former; I haven't learned much about the latter.

The most common sequencing method today is Next Generation Sequencing, commonly called Illumina sequencing after the main vendor. Bases are dyed and the machine reads their colors. The output of sequencing is a large number of short reads. Each read is a sequence of 50-300 bases, usually around 150. In setting up the sequencing run you choose how many bases to read, and different applications will make the most sense with different lengths. Accuracy drops off as you read farther along the strand. Note the lengths we're talking about are way less than the length of a full nucleic acid, which is generally at least thousands of bases. Not getting the full picture is a big downside of this kind of sequencing.

Let's get some real data to play with. When people publish a paper that depends on sequencing they generally upload their raw data to the NIH's National Center for Biotechnology Information (NCBI). Here's a paper I've been looking at recently, which sequenced wastewater: RNA Viromics of Southern California Wastewater and Detection of SARS-CoV-2 Single-Nucleotide Variants. If you look down to the "Data availability" section, you'll see:

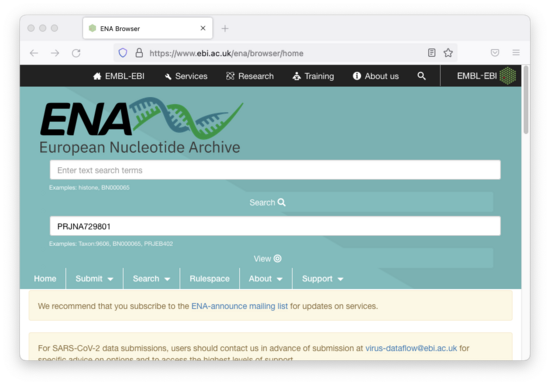

Raw sequencing data have been deposited on the NCBI Sequence Read Archive under accession number PRJNA729801, and representative code can be found at https://github.com/jasonarothman/wastewater_viromics_sarscov2.The GitHub link is helpful for getting metadata (what does each sample represent?) and understanding how they processed it (what tools did they use and how?), but for now we're looking for sequencing data. The accession number is "PRJNA729801", and while we could click through and download it from the NCBI, the user interface on the European mirror (European Nucleotide Archive) is much better. We go to their landing page and enter the accession number:

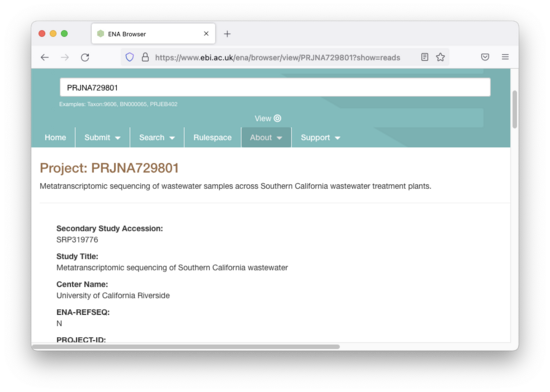

This takes us to a page that describes the data:

We want to sanity check the title to make sure we didn't end up with the wrong data set, and "Metatranscriptomic sequencing of Southern California wastewater" sounds about right.

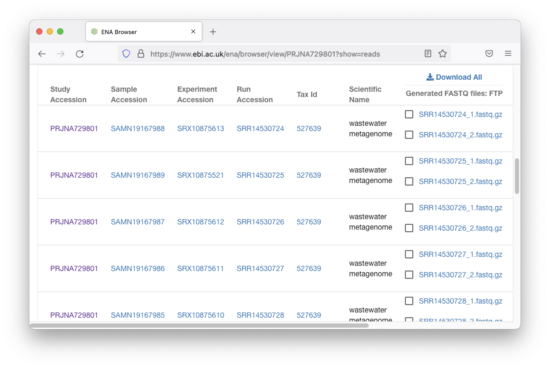

Scrolling down there are links:

We could download all of this data, but it would be about 80GB

compressed. For now, let's just download a single

fastq.gz file, at ~150MB: SRR14530724_1.fastq.gz.

These files are generally both very large and very repetitive, so they're a natural candidate for compression. The most common option is gzip, and that's what they've used here. Let's start by decompressing it:

$ gunzip SRR14530724_1.fastq.gz

Now we can look at it:

$ head -n 4 SRR14530724_1.fastq @SRR14530724.1 1/1 GTTGTTATCCTGCGCGGCGACGCCAGCCTTGCTCAATTGCTCGAGCAGGGC CTGTCTCTTATACACATCTCCGAGCCCACGAGACAGGTCAGATAATCTCGT ATGCCGTCTTCTGCTTGAAAAGGGGGGGGGGGGGGGGGGGGGGGGGGGG + FFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFF F:F:FFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFF,FFF FFF:FF,FFF::FF::F::::,FFFFFFFFFFFFFFFFFFFFFFFFFFFThis file format is called FASTQ and right now we're looking at a single read from the file. The line starting with "@" gives the id for this sequence, then there's the sequence itself. A line starting with "+" indicates the end of the sequence, and then there's the quality score which we'll talk about in a bit.

I can see that there are 2.7M reads in this file:

$ grep -c ^@ SRR14530724_1.fastq 2737872

You'll notice that this read ends in a long string of 'G's, and this is actually very common in the data:

$ grep -c 'GGGGGGGG$' SRR14530724_1.fastq 840839 # 31% of reads

This is called Poly-G and comes from the particular chemistry

this sequencer uses. It identifies bases by dying A and T one color

(green), and A and C a different color (red). This means A will be

yellow (green+red light), T will be green, C will be red, and G will

be black (no light). The sequencer can't distinguish "there are no

bases" from "there are bases but they're all G and didn't pick up any

dye". When a sequence is shorter than expected it gets a Poly-G tail.

You generally trim these tails after sequencing as part of a general

quality control step; we'll just do sed 's/GGGGGGGG*$//'

for now.

The sequencer has some information about how confident it is: sometimes it sees a single clear color, other times there's a bit of another color mixed in. This is what the quality score encodes. It's the same length as the sequence, and they correspond character for character.

You convert the letter to a numeric value by taking the ASCII value of

the character and subtracting 33, the ASCII value of the first

non-whitespace printable character ('!'). To convert

this to an error probability you multiply by -0.1, take that power of

ten. For example, 'F' is ASCII 70, and 10^(-.1*(70-33))

is 0.02% chance of error, or a 99.98% chance of being accurate. An

increase of ten ASCII positions indicates a 10x higher accuracy.

This sequencer uses only a few different quality values:

| Phred character | Numeric Value | Accuracy |

|---|---|---|

| F | 37 | 99.98% |

| : | 25 | 99.7% |

| , | 11 | 92% |

| # | 2 | 37% |

Looking at this one sequencing run I see:

$ cat SRR14530724_1.fastq

| grep FF # quality line only

| sed 's/\(.\)/\1\n/g' # one char per line

| grep . # ignore whitespace

| head -n 10000000 # sample the data

| sort | uniq -c # count types

257 # # ~0%

411192 , # 4%

506844 : # 5%

9081707 F # 91%

Let's pull out just a short section of the sequence and its corresponding quality scores:

CTGTCTCT F:F:FFFF

This is saying it's 99.7% confident about those first two "T"s, and 99.98% confident about the other calls.

In cases where you don't care about quality scores you'll often use the "FASTA" file format instead:

>SRR14530724.1 1/1 GTTGTTATCCTGCGCGGCGACGCCAGCCTTGCTCAATTGCTCGAGCAGGGC CTGTCTCTTATACACATCTCCGAGCCCACGAGACAGGTCAGATAATCTCGT ATGCCGTCTTCTGCTTGAAAAGGGGGGGGGGGGGGGGGGGGGGGGGGGG >SRR14530724.2 2/1 CATTTTCGACGGCGTCGATGTACAAAGGTTATACCATAGTAAGTCCGAAGC TACAGGCTTATGACACCGCAGAGTCAATGTATTCCGGTGACAATGTACTGA TGTACAGTGGGACTGACACTGTCTCTTATACACATCTCCGAGCCCACGAThe ">" marks the beginning of a read, and everything after it is the id for that sequence. For example, the first read in this file is "SRR14530903.1 1/2". All lines until the next ">" are the sequence for that read.

You'll notice that some of the files end in _1.fastq.gz

and some end in _2.fastq.gz, and each pair has the same

number of reads:

$ grep -c ^@ SRR14530724_*.fastq SRR14530724_1.fastq:2737872 SRR14530724_2.fastq:2737872This is because this particular data set used paired-end sequencing. The idea is you simultaneously sequence a section at the beginning (the forward read) and a section at the end (the reverse read) of each fragment, putting them in the

_1 and _2 files respectively. There's an

unsequenced gap between them, and you know about how long it is based

on how you chopped up your fragments before sequencing. This is

useful in figuring out how these fragments can be assembled

into full genetic sequences.

All of this so far has been describing short Illumina-style reads, but there's another kind of sequencing that's becoming increasingly popular, called Nanopore sequencing. The nucleic acid is fed through a tiny hole in a chip, and different sequences of bases will generate different electrical signals as they pass through. Converting these signals to a string of ACTG and producing a FASTQ file is basecalling and you generally use a neural network running on a GPU. This produces much longer reads than Illumina sequencing, because you can feed through an arbitrarily long nucleic acid. Read of tens of thousands of bases are common, and reads in the millions are possible. Long reads are helpful for many things, especially if you're working with a mixture of nucleic acids pulled from a natural environment like skin, saliva, wastewater, etc. (metagenomics, metatranscriptomics). At this point Nanopore is approximately cost competitive with Illumina, but still has a much higher error rate.

Let's stop things here, since this is long enough already. Other things I might write about at some point: assembly, qPCR, amplicon sequencing, reverse complements, tooling, k-mers. Happy to answer questions!

- Prototyping in C

- Sequencing Intro II: Adapters

- Case Rates to Sequencing Reads

- Prevalence to Relative Abundance

- Estimating Norovirus Prevalence

- Simple Terminal Colors

- What is a Sequencing Read?

Comment via: facebook, lesswrong, substack