Real Time Bass Whistling

July 2nd, 2019

contra, music, whistling

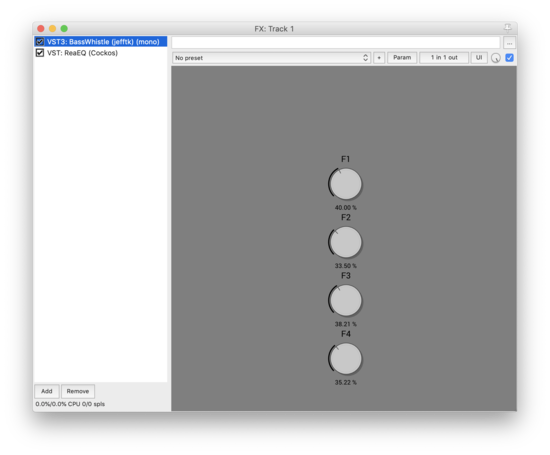

Here's what I have so far: mp3. The left channel is the bass, while the right is the input whistling. If you have a DAW on a Mac you could try the VST3.

How did I make this? I initially thought I should use Imitone, since that's what it's built to do. While it's pretty neat, and does some clever things with interpreting vocals, I couldn't get the latency low enough there to be pleasant even with whistling.

I decided to try writing something myself. The first thing I figured out was that you'd normally do this with a Fourier transform. The idea is, you give it a bunch of samples, it tells you what frequencies are there. That's going to add latency, though, since the more precision you want on frequency the less precision you get on time.
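To put rough numbers on that tradeoff, here's a small sketch. The function name is made up for illustration, and it only looks at raw FFT bin spacing; windowing and peak interpolation change the details in practice.

```python
SAMPLE_RATE = 44100  # Hz, the rate used later in this post


def fft_window_for_resolution(resolution_hz, sample_rate=SAMPLE_RATE):
    """Samples (and milliseconds) of audio needed for FFT bins this narrow.

    An N-sample FFT has bins spaced sample_rate / N apart, so narrower
    bins need a longer window, and a longer window means more latency.
    """
    n_samples = sample_rate / resolution_hz
    return n_samples, 1000 * n_samples / sample_rate


for resolution_hz in (100, 50, 10):
    n, ms = fft_window_for_resolution(resolution_hz)
    print(f"{resolution_hz:>4} Hz bins: {n:.0f} samples, {ms:.0f} ms of audio")
```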

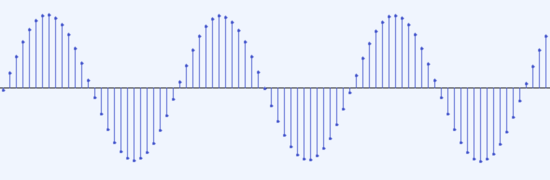

Whistling is very close to a sine wave, so we can do better than that. Here's what our signal looks like:

Instead of complex processing, we can just count how many samples happen between zero crossings. Specifically, between one positive to negative transition and the next positive to negative transition. For example, in this case I count 27 samples in a cycle, and we're sampling at 44.1kHz, so that's 1633Hz.
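A minimal sketch of that counting, run against a synthetic 1633Hz sine standing in for a whistle. The names are illustrative and not taken from the actual code.

```python
import math

SAMPLE_RATE = 44100  # Hz


def periods_by_counting(samples):
    """Yield whole-sample counts between positive-to-negative crossings."""
    last_crossing = None
    for i in range(1, len(samples)):
        if samples[i - 1] >= 0 and samples[i] < 0:
            if last_crossing is not None:
                yield i - last_crossing
            last_crossing = i


# Synthetic stand-in for a whistle: a pure sine at 1633Hz.
whistle = [math.sin(2 * math.pi * 1633 * i / SAMPLE_RATE) for i in range(2000)]
periods = list(periods_by_counting(whistle))
estimates = [SAMPLE_RATE / p for p in periods]
print(periods[0], "samples per cycle, so about", round(estimates[0]), "Hz")
```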

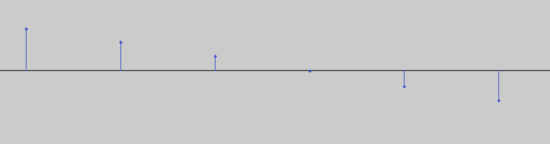

This is fast, but it's not ideal. Consider these two zero crossings:

In the first case, the signal crosses zero very close to the last positive sample, while in the second the crossing is very close to the first negative sample. If we just count samples we can be off by a sample's worth. If this makes us count 26 samples instead of 27, that's 1696Hz instead of 1633Hz, about 65 cents, or roughly two thirds of a half step.
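To check how big a one-sample miscount is in musical terms, here's a quick calculation. The helper name is mine.

```python
import math

SAMPLE_RATE = 44100  # Hz


def cents_between(f1, f2):
    """Musical interval from f1 up to f2 in cents (100 cents = one half step)."""
    return 1200 * math.log2(f2 / f1)


f27 = SAMPLE_RATE / 27  # counting 27 samples per cycle
f26 = SAMPLE_RATE / 26  # counting 26 samples per cycle
print(round(f27), "Hz vs", round(f26), "Hz:",
      round(cents_between(f27, f26)), "cents apart")
```

The error gets worse for higher whistles, since one sample is a larger share of a shorter cycle.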

If we figure that the signal is approximately linear where it crosses zero, which is a good assumption for sine waves, then we can adjust by looking at the last positive and first negative values. The larger the first negative value is compared to the last positive one, the farther back the zero crossing was. Mathematically, if n is the first negative value, p is the last positive value, and a is the fraction of a sample by which we should shift back our zero crossing, we have:

        p
         \
      ----------
           \
            \
             \
              \
               \
                n
          +--a--+

and so:

$$a = \frac{|n|}{|n| + |p|}$$

This makes our pitch estimates a lot more accurate.
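Here's a sketch of the adjusted version, assuming the same positive-to-negative crossing detection as before. The function names are illustrative, and the test signal is a synthetic 1650Hz sine, whose period (about 26.7 samples) plain counting can only report as 26 or 27.

```python
import math

SAMPLE_RATE = 44100  # Hz


def crossing_offset(p, n):
    """Fraction of a sample to shift back from the first negative sample.

    p is the last non-negative value and n is the first negative value,
    so the denominator is never zero.
    """
    return abs(n) / (abs(n) + abs(p))


def periods_interpolated(samples):
    """Yield fractional sample counts between positive-to-negative crossings."""
    last_crossing = None
    for i in range(1, len(samples)):
        p, n = samples[i - 1], samples[i]
        if p >= 0 and n < 0:
            crossing = i - crossing_offset(p, n)
            if last_crossing is not None:
                yield crossing - last_crossing
            last_crossing = crossing


whistle = [math.sin(2 * math.pi * 1650 * i / SAMPLE_RATE) for i in range(2000)]
estimates = [SAMPLE_RATE / p for p in periods_interpolated(whistle)]
print(f"first estimate: {estimates[0]:.1f} Hz")
```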

I tried playing around with generating midi and controlling virtual instruments, but synthesizing a sound directly felt more natural. The basic logic is:

    val = sine(τ * fraction_of_period)

That is, if we're 0% of the way from zero crossing to zero crossing, then we emit zero, 25% we emit one, 50% zero again, 75% negative one, and 100% back to zero.

If we use the (adjusted) number of samples between zero crossings as our period, we get a sine wave at the same pitch we're whistling. To bring it down N octaves we need to use a period that's 2^N times as long.
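As a sketch of that, here's the simple version that assumes the input period stays fixed. The function name and arguments are hypothetical.

```python
import math

TAU = 2 * math.pi


def synthesize(period_in_samples, octaves_down, n_samples):
    """Sine wave N octaves below an input with the given period."""
    out_period = period_in_samples * 2 ** octaves_down
    out = []
    for i in range(n_samples):
        fraction_of_period = (i % out_period) / out_period
        out.append(math.sin(TAU * fraction_of_period))
    return out


# A 27-sample whistle (about 1633Hz) brought down five octaves has a
# period of 27 * 32 = 864 samples, which is about 51Hz at 44.1kHz.
bass = synthesize(27, 5, 864)
print(bass[0], bass[216], bass[648])  # start, 25% and 75% of the way through
```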

I'm currently mixing up a signal that's got bits of the original signal down five octaves, down four, down three and a half, and down three. This is kind of arbitrary and I should play with it more. Organ drawbars would be a good interface here.
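One way to picture the drawbar idea is a table of levels keyed by how many octaves down each component sits. This is only a sketch, and the levels below are made up rather than the mix described above.

```python
import math

TAU = 2 * math.pi

# Octaves down -> level, like organ drawbars. These levels are invented
# for illustration.
DRAWBARS = {5: 1.0, 4: 0.6, 3.5: 0.3, 3: 0.4}


def mixed_sample(i, period_in_samples, drawbars=DRAWBARS):
    """Sample i of the mix, for a whistle with the given period in samples."""
    total = 0.0
    for octaves_down, level in drawbars.items():
        out_period = period_in_samples * 2 ** octaves_down
        total += level * math.sin(TAU * i / out_period)
    # Divide by the summed levels so the result stays within [-1, 1].
    return total / sum(drawbars.values())


mix = [mixed_sample(i, 27) for i in range(2000)]
```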

I had been prototyping in python up to this point, but multiple sine computations for every sample was too much for it to keep up, so I ported things over to C here.

For volume, I tried using the average energy of the previous input period to determine the energy for the next output period, but currently I'm just using constant volume.

This mostly works, but can give crackles and unpleasant high pitched noises when the input frequency changes. The problem is that it suddenly jumps from one point on the sine wave to one that isn't very close.

I was able to mostly fix this by rethinking my approach so everything changed smoothly. I track "how far am I along the wave" and "what's my target wavelength in samples" and slide the wavelength toward the target. Similarly, instead of the wave coming in and out at full volume, I fade it.
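A rough sketch of that approach, assuming a simple exponential slide. The class, its attribute names, and the slide and fade rates are all hypothetical and not taken from the actual code.

```python
import math

TAU = 2 * math.pi


class SmoothOscillator:
    def __init__(self, wavelength, slide=0.01, fade=0.001):
        self.phase = 0.0              # how far along the wave we are, in [0, 1)
        self.wavelength = wavelength  # current wavelength in samples
        self.target = wavelength      # target wavelength in samples
        self.volume = 0.0             # current volume
        self.target_volume = 0.0      # volume we are fading toward
        self.slide = slide            # made-up rate for wavelength changes
        self.fade = fade              # made-up rate for volume changes

    def next_sample(self):
        # Move a small step toward each target instead of jumping.
        self.wavelength += self.slide * (self.target - self.wavelength)
        self.volume += self.fade * (self.target_volume - self.volume)
        # Advance by one sample's worth of the current wavelength, so the
        # phase never jumps even when the pitch changes.
        self.phase = (self.phase + 1.0 / self.wavelength) % 1.0
        return self.volume * math.sin(TAU * self.phase)


osc = SmoothOscillator(wavelength=864)
osc.target_volume = 1.0
out = [osc.next_sample() for _ in range(2000)]
osc.target = 432  # the whistle jumps up an octave
out += [osc.next_sample() for _ in range(2000)]
```

Because only the step size changes when the target moves, consecutive output samples always stay close together.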

There are still some crackles, and a low pass filter would work well. For now I'm doing some really simple averaging:

    out = (out + 15*last_out) / 16;

This means that as long as each output sample is close to the ones near it the signal is fine, but large fast shifts are heavily damped. This is basically a low-pass filter, which is fine since I'm trying to emit bass notes with periods in the 1000 to 500 sample (45 to 90Hz) range.
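To see how much this averaging passes and blocks, here's a sketch of the same recurrence along with its gain at a given frequency, using the standard magnitude response of a one-pole filter. The function names are illustrative.

```python
import math

SAMPLE_RATE = 44100  # Hz


def smooth(samples, weight=15):
    """Apply out = (out + weight*last_out) / (weight + 1) to each sample."""
    out = []
    last_out = 0.0
    for x in samples:
        last_out = (x + weight * last_out) / (weight + 1)
        out.append(last_out)
    return out


def gain(freq_hz, weight=15, sample_rate=SAMPLE_RATE):
    """Steady-state amplitude gain of smooth() for a sine at freq_hz."""
    a = 1 / (weight + 1)  # share of each new sample that gets through
    w = 2 * math.pi * freq_hz / sample_rate
    return a / math.sqrt(1 - 2 * (1 - a) * math.cos(w) + (1 - a) ** 2)


for freq_hz in (60, 1000, 5000):
    print(f"{freq_hz:>5} Hz: gain {gain(freq_hz):.2f}")
```

Bass frequencies pass almost untouched while content in the kilohertz range is cut substantially, though the rolloff is gentle compared to a purpose-built filter.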

It's not a very good filter, though. Instead, I think I should just not do this averaging in my code and put a proper low pass filter afterwards.

I used iPlug2 to get it into Reaper and I'm pretty happy with it. Definitely needs a low pass filter, though.

The code is on github and uses PortAudio though I've only tried it on mac. I also have the VST in a separate iPlug2 repo.
