Design Testing

November 19th, 2011

experiment, tech, work

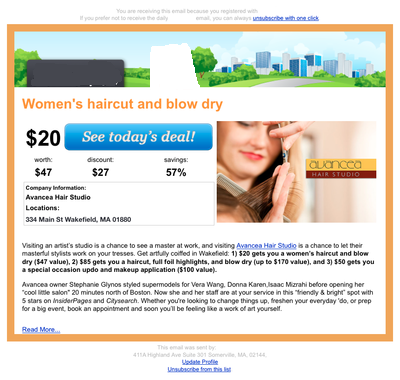

One humbling aspect of testing designs is realizing that I'm not very good at predicting whether a change will help. None of us are. When we test new designs, sometimes they work well and other times they don't. [2] For example, consider two redesigns from the early days of our daily deals website. The first is an email design, the second is a site design:

Old:

New:

One of these was a 14% improvement, the other a 27% degradation. Can you tell which was which?

Old:

New:

[1] Not all websites have an obvious metric for "performs better". For example, how does Wikipedia know if a site change improves things for their users? (More edits? Better edits? More time reading? Less time?) We're generally trying to sell things, however, so we can mostly just look at the fraction of users who advance to the next step in the sales process.

[2] This really shows the value of testing: if we just made every change we thought was good, we wouldn't improve anywhere near as much as we do by adopting only the changes that help.
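
To put rough numbers on footnote [2], here is a minimal Python sketch. It uses the metric from footnote [1] (the fraction of users who advance to the next step) and assumes, purely as a simplification, that the effects of separate changes multiply. The function names and the visitor counts are hypothetical, made up for illustration. Only the 14% and 27% figures come from the example above, and since one was an email and the other a site design, combining them like this is illustrative rather than a real measurement.

```python
def conversion_rate(advanced: int, visitors: int) -> float:
    """Fraction of users who advance to the next step in the sales process."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return advanced / visitors


def relative_change(old_rate: float, new_rate: float) -> float:
    """Relative change of new over old; 0.14 means a 14% improvement."""
    if old_rate <= 0:
        raise ValueError("old_rate must be positive")
    return (new_rate - old_rate) / old_rate


def combined_effect(changes: list[float]) -> float:
    """Net relative change from adopting every change in the list.

    Assumption: effects are independent and multiply.
    """
    factor = 1.0
    for change in changes:
        factor *= 1.0 + change
    return factor - 1.0


# Hypothetical counts: 50 of 1000 users advance with the old design,
# 57 of 1000 with the new one, which works out to a 14% improvement.
old = conversion_rate(50, 1000)
new = conversion_rate(57, 1000)
print(f"relative change:    {relative_change(old, new):+.1%}")

# The two results quoted in the post: one +14%, one -27%.
results = [0.14, -0.27]

adopt_everything = combined_effect(results)
adopt_only_winners = combined_effect([c for c in results if c > 0])

print(f"adopt everything:   {adopt_everything:+.1%}")
print(f"adopt only winners: {adopt_only_winners:+.1%}")
```

Under these assumptions, shipping both changes nets out to a loss of about 17%, while shipping only the one that tested well keeps the full 14% gain.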
